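Zhu Siying, a graduate student in the Time Series Research Group, has published a paper titled "MR-Transformer: Multi-Resolution Transformer for Multivariate Time Series Prediction" in IEEE Transactions on Neural Networks and Learning Systems (TNNLS), a top international journal in the field of artificial intelligence.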

Abstract: Multivariate Time Series (MTS) prediction has been studied broadly, which is widely applied in real-world applications. Recently, Transformer-based methods have shown the potential in this task for their strong sequence modeling ability. Despite progress, these methods pay little attention to extracting short-term information in the context, while short-term patterns play an essential role in reflecting local temporal dynamics. Moreover, we argue that there are both consistent and specific characteristics among multiple variables, which should be fully considered for multivariate time series modeling. To this end, we propose a Multi-Resolution Transformer (MR-Transformer) for MTS prediction, modeling multivariate time series from both the temporal and the variable resolution. Specifically, for the temporal resolution, we design a long short-term Transformer. We first split the sequence into non-overlapping segments in an adaptive way and then extract short-term patterns within segments, while long-term patterns are captured by the inherent attention mechanism. Both of them are aggregated together to capture the temporal dependencies. For the variable resolution, besides the variable-consistent features learned by long short-term Transformer, we also design a temporal convolution module to capture the specific features of each variable individually. MR-Transformer enhances the multivariate time series modeling ability by combining multi-resolution features between both time steps and variables. Extensive experiments conducted on real-world time series datasets show that MR-Transformer significantly outperforms the state-of-the-art MTS prediction models. The visualization analysis also demonstrates the effectiveness of the proposed model.
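To make the temporal-resolution idea in the abstract more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. It rests on several assumptions that do not come from the paper: segments have a fixed length (the paper splits adaptively), the short-term pattern of a segment is summarized by its mean and its last-minus-first change (a stand-in for a learned extractor), attention uses identity projections with no trained weights, and the two views are aggregated by concatenation. All function names are hypothetical.

```python
import math


def split_segments(series, seg_len):
    # Assumption: fixed-length, non-overlapping segments; a trailing
    # remainder shorter than seg_len is dropped. The paper splits adaptively.
    n = len(series) // seg_len
    return [series[i * seg_len:(i + 1) * seg_len] for i in range(n)]


def short_term_features(segments):
    # Stand-in for a learned short-term extractor: per-segment mean and
    # the change from the first to the last value of the segment.
    return [[sum(s) / len(s), s[-1] - s[0]] for s in segments]


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(feats):
    # Scaled dot-product self-attention across segments, with identity
    # query/key/value projections (no learned parameters).
    d = len(feats[0])
    out = []
    for q in feats:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in feats]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, feats)) for j in range(d)])
    return out


def long_short_term(series, seg_len):
    segments = split_segments(series, seg_len)
    short = short_term_features(segments)   # local patterns within segments
    long_range = self_attention(short)      # dependencies across segments
    # Assumption: aggregate the two views by simple concatenation.
    return [s + l for s, l in zip(short, long_range)]


series = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
features = long_short_term(series, seg_len=3)
```

With this toy input, `features` holds one vector per segment: the first two entries are the local summary and the last two are the attention-mixed summary across all segments. The variable-resolution branch described in the abstract (a per-variable temporal convolution) is not covered by this sketch.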

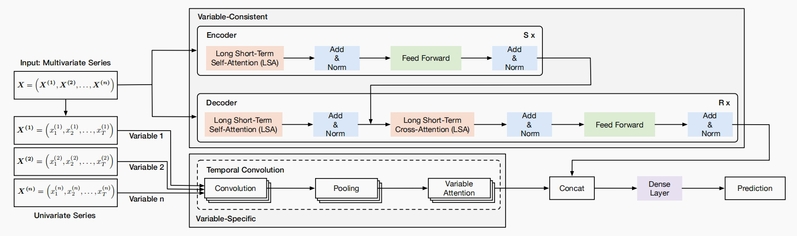

Model architecture figure:

Paper link: MR-Transformer Multi-Resolution Transformer for Multivariate Time Series Prediction.pdf
