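NeurIPS 2023 recently announced its accepted papers. A paper by Zhen Liu, a PhD student in the time series research group, stood out among 12,343 submissions and was accepted. NeurIPS is one of the most prestigious top-tier conferences in artificial intelligence and machine learning. Its strong recognition in the field has long made it a preferred venue for researchers. According to official figures, the main-track acceptance rate this year was 26.1%.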

Paper title: "Scale-teaching: Robust Multi-scale Training for Time Series Classification with Noisy Labels"

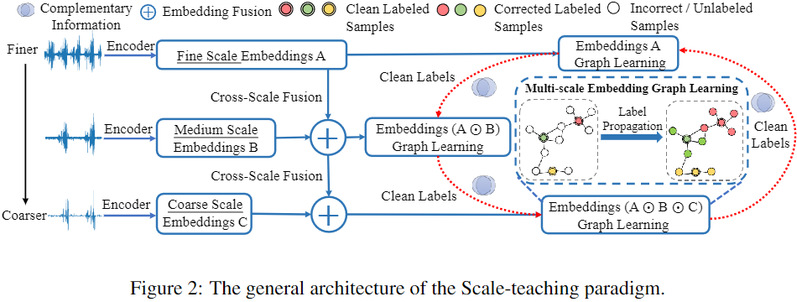

Abstract: Deep Neural Networks (DNNs) have been criticized because they easily overfit noisy (incorrect) labels. To improve the robustness of DNNs, existing methods for image data regard samples with small training losses as correctly labeled data (small-loss criterion). Nevertheless, time series' discriminative patterns are easily distorted by external noises (i.e., frequency perturbations) during the recording process. This results in training losses of some time series samples that do not meet the small-loss criterion. Therefore, this paper proposes a deep learning paradigm called Scale-teaching to cope with time series noisy labels. Specifically, we design a fine-to-coarse cross-scale fusion mechanism for learning discriminative patterns by utilizing time series at different scales to train multiple DNNs simultaneously. Meanwhile, each network is trained in a cross-teaching manner by using complementary information from different scales to select small-loss samples as clean labels. For unselected large-loss samples, we introduce multi-scale embedding graph learning via label propagation to correct their labels by using selected clean samples. Experiments on multiple benchmark time series datasets demonstrate the superiority of the proposed Scale-teaching paradigm over state-of-the-art methods in terms of effectiveness and robustness.
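The abstract names three mechanisms: viewing each series at several scales, selecting small-loss samples and exchanging them between networks (cross-teaching), and correcting the remaining large-loss samples by label propagation. Below is a minimal, stdlib-only Python sketch of those three steps under stated assumptions. It is not the authors' implementation. The DNNs, the training loop and the fine-to-coarse fusion are omitted, and per-sample losses and embeddings are taken as given inputs. All function names are hypothetical. Average pooling is assumed as the downsampling method. Each scale is assumed to learn from the next scale's selection in a ring. Label propagation uses the standard iterative form F ← αPF + (1 − α)Y on a Gaussian kNN graph, which may differ from the paper's exact formulation.

```python
import math


def downsample(series, factor):
    """Average-pool a 1-D series by `factor` to get a coarser scale (assumed method)."""
    return [sum(series[i:i + factor]) / len(series[i:i + factor])
            for i in range(0, len(series), factor)]


def small_loss_indices(losses, keep_ratio):
    """Indices of the `keep_ratio` fraction of samples with the smallest loss."""
    k = max(1, round(len(losses) * keep_ratio))
    return sorted(range(len(losses)), key=lambda i: losses[i])[:k]


def cross_teach(losses_per_scale, keep_ratio):
    """Hypothetical cross-teaching: the network at scale s trains on the
    clean set selected by the network at scale (s + 1) mod S."""
    picks = [small_loss_indices(losses, keep_ratio) for losses in losses_per_scale]
    n = len(picks)
    return {s: sorted(picks[(s + 1) % n]) for s in range(n)}


def propagate_labels(emb, labels, num_classes, k=2, alpha=0.9, iters=50):
    """Standard label propagation F <- alpha * P F + (1 - alpha) * Y on a kNN graph.

    `labels[i]` is a class id for selected (clean) samples and None otherwise.
    Selected samples keep their labels; the rest take the argmax of F.
    """
    n = len(emb)
    dist = [[sum((a - b) ** 2 for a, b in zip(emb[i], emb[j])) for j in range(n)]
            for i in range(n)]
    # Gaussian edge weights to the k nearest neighbours (excluding self), symmetrised
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        nbrs = sorted((j for j in range(n) if j != i), key=lambda j: dist[i][j])[:k]
        for j in nbrs:
            w[i][j] = w[j][i] = math.exp(-dist[i][j])
    # Row-normalise the weights into a transition matrix P
    p = [[x / (sum(row) or 1.0) for x in row] for row in w]
    # One-hot seed matrix Y: all-zero rows for unselected samples
    y = [[1.0 if labels[i] == c else 0.0 for c in range(num_classes)] for i in range(n)]
    f = [row[:] for row in y]
    for _ in range(iters):
        f = [[alpha * sum(p[i][j] * f[j][c] for j in range(n)) + (1 - alpha) * y[i][c]
              for c in range(num_classes)] for i in range(n)]
    return [labels[i] if labels[i] is not None
            else max(range(num_classes), key=lambda c: f[i][c])
            for i in range(n)]
```

For example, `downsample([1.0, 3.0, 2.0, 4.0, 5.0], 2)` gives `[2.0, 3.0, 5.0]`. With two well-separated clusters that each contain one selected sample, `propagate_labels` assigns every unselected sample the label of its own cluster. Because α < 1 and P is row-stochastic, the iteration converges. The (1 − α)Y term keeps pulling the selected samples toward their clean labels.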

Model diagram:

Paper link: NeurIPS2023_Scale_teaching_camera_ready.pdf
